Uncertainties in RF and Microwave Power Measurements

Introduction

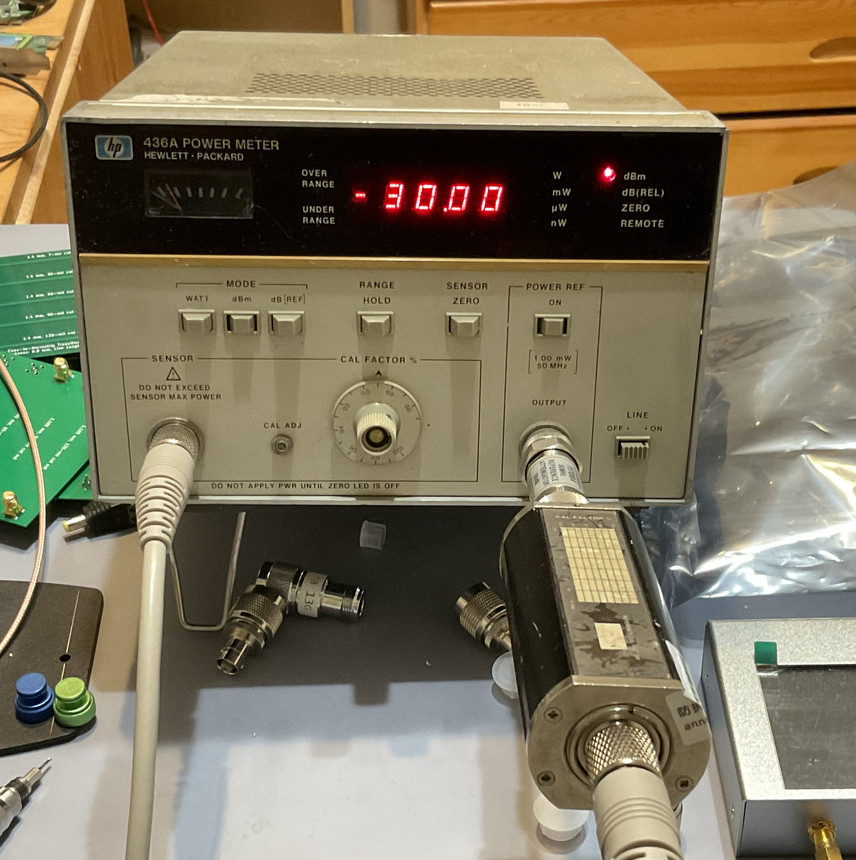

Recently, I purchased a vintage Hewlett-Packard 436A RF power meter and an HP 8484A power sensor for testing a circuit I’m building. Since the measurements I’m trying to make are delicate, on the order of 0.1 dB, I need to estimate my measurement accuracy and understand the sources of uncertainty.

In this article, the main sources of uncertainty in RF and microwave power measurements are explained.

This article belongs to the Tech Note section of this blog, which contains notes on technical subjects for my personal understanding and future reference. They are not necessarily of interest to all readers, and the quality may not be high enough, but hopefully they are still useful to some.

In particular, the rigorous and systematic method of calculating uncertainties according to the Guide to the Expression of Uncertainty in Measurement (GUM) is intentionally omitted due to its mathematical complexity.

Mismatch Uncertainty

Imperfect impedance matching, combined with the practical infeasibility of measuring the complex reflection coefficients, creates the biggest source of uncertainty in RF measurements.

Sensor Mismatch Loss

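Let’s consider an ideal RF signal generator connected to an ideal transmission line, with an imperfect RF power sensor attached at its end. Since the RF power sensor is imperfect, its complex input impedance $Z_L$ is not exactly equal to the system impedance $Z_0$ (usually 50 Ω), so it has a nonzero complex reflection coefficient:

$$\Gamma_L = \frac{Z_L - Z_0}{Z_L + Z_0}$$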

When the radio wave travels down the line and hits the sensor, a portion of the incident power is instantly reflected back to the source.

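The reduction of power transmitted to the sensor due to reflection is known as the mismatch loss, which is given by:

$$\mathrm{ML} = -10\log_{10}\left(1 - |\Gamma_L|^2\right)\ \mathrm{dB}$$

where $|\Gamma_L|$ is the magnitude of the sensor’s reflection coefficient.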

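Here, knowing the complex form of $\Gamma_L$ is not necessary: only its magnitude matters, and it can be obtained from the sensor’s SWR as $|\Gamma_L| = (\mathrm{SWR} - 1)/(\mathrm{SWR} + 1)$.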

For example, let’s assume the sensor’s input impedance is almost matched to the system impedance, say

If the signal generator is ideal, that would be the end of it: when the reflected power hits the signal generator, it’s dissipated inside the generator and no longer plays a role. Just add 0.16 dB to the measured power, and this measurement error is fully corrected. In fact, this error is absorbed into the Calibration Factor of the power sensor, so it’s already compensated for.
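The single-reflection relations above are easy to check numerically. Below is a minimal Python sketch, assuming a real system impedance of 50 Ω; the function names and the 75 Ω example load are hypothetical, chosen only for illustration and not taken from the example sensor above.

```python
import math


def reflection_coefficient(z_load: complex, z0: float = 50.0) -> complex:
    """Complex reflection coefficient of a load terminating a line of impedance z0."""
    return (z_load - z0) / (z_load + z0)


def gamma_from_swr(swr: float) -> float:
    """Magnitude of the reflection coefficient for a given SWR (SWR >= 1)."""
    return (swr - 1.0) / (swr + 1.0)


def mismatch_loss_db(gamma_mag: float) -> float:
    """Mismatch loss in dB (a positive number) for a sensor fed by an ideal source."""
    return -10.0 * math.log10(1.0 - gamma_mag ** 2)


if __name__ == "__main__":
    gamma = reflection_coefficient(75.0)  # hypothetical 75-ohm sensor input
    print(f"|Gamma| = {abs(gamma):.3f}")                             # prints |Gamma| = 0.200
    print(f"SWR 1.5 -> |Gamma| = {gamma_from_swr(1.5):.3f}")         # prints ... = 0.200
    print(f"mismatch loss = {mismatch_loss_db(abs(gamma)):.3f} dB")  # prints ... = 0.177 dB
```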

Measurement Mismatch Loss

Unfortunately, in the real world, the signal generator is not ideal. Due to physical imperfections of its RF connector and circuit components, just like the sensor, it’s unable to fully maintain the ideal system impedance. With a complex output impedance $Z_G \neq Z_0$, it also has a nonzero reflection coefficient $\Gamma_G = (Z_G - Z_0)/(Z_G + Z_0)$.

When the reflected power from the sensor hits the signal generator, a portion of it is re-reflected towards the sensor; when that hits the sensor, a portion is reflected back to the source again, ad infinitum.

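Worse, the reflected power can add constructively or destructively to the incident power depending on its phase shift. Thus, the measurement mismatch loss is not just the sum of the two individual mismatch losses, but is instead given by:

$$\mathrm{MML} = -10\log_{10}\frac{\left(1 - |\Gamma_G|^2\right)\left(1 - |\Gamma_L|^2\right)}{\left|1 - \Gamma_G\Gamma_L\right|^2}\ \mathrm{dB}$$

where $\Gamma_G$ and $\Gamma_L$ are the complex reflection coefficients of the signal generator and the sensor. The numerator holds the two individual mismatch losses; the denominator depends on the relative phase of the two reflections.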

Quite unexpectedly, we can show that an imperfect power sensor can sometimes receive more power than a perfectly matched sensor would, contrary to what the name mismatch loss suggests. This happens when we’re especially unlucky and the reflected power has just the right phase to add constructively to the incident power. Similarly, when the phase is aligned destructively, the loss of received power can be greater than the sensor’s own mismatch loss, which may create a significant error.
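To see the constructive and destructive cases concretely, here is a small Python sketch of the two-reflection formula. `mismatch_factor` (a hypothetical helper) compares the sensor’s absorbed power to what a $Z_0$ sensor would absorb from the same source, and `measurement_mismatch_loss_db` evaluates the measurement mismatch loss relative to the generator’s available power. The reflection coefficients are made-up values, not measurements of any real instrument.

```python
import cmath
import math


def mismatch_factor(gamma_g: complex, gamma_l: complex) -> float:
    """Power absorbed by the sensor relative to what a perfectly matched (Z0)
    sensor would absorb from the same source: (1 - |GL|^2) / |1 - GG*GL|^2."""
    return (1 - abs(gamma_l) ** 2) / abs(1 - gamma_g * gamma_l) ** 2


def measurement_mismatch_loss_db(gamma_g: complex, gamma_l: complex) -> float:
    """Measurement mismatch loss in dB, relative to the source's available power."""
    ratio = (1 - abs(gamma_g) ** 2) * (1 - abs(gamma_l) ** 2) / abs(1 - gamma_g * gamma_l) ** 2
    return -10 * math.log10(ratio)


if __name__ == "__main__":
    gamma_g = 0.5  # hypothetical, badly matched source (real-valued for simplicity)
    for phase_deg in (0, 90, 180):
        gamma_l = cmath.rect(0.5, math.radians(phase_deg))  # same |Gamma|, varying phase
        print(phase_deg, round(mismatch_factor(gamma_g, gamma_l), 3))
    # 0 deg: 1.333 (more than a matched sensor), 90 deg: 0.706, 180 deg: 0.48
```

With $\Gamma_G = \Gamma_L = 0.5$ (both ends are 150 Ω, a conjugate match), the measurement mismatch loss works out to exactly 0 dB, even though each end on its own has a mismatch loss of about 1.25 dB.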

For example, a perfectly matched sensor has

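This is easier to understand in the language of circuit theory. In this case, the source, whose output impedance $Z_G$ is not exactly $Z_0$, delivers its maximum power not to a $Z_0$ load, but to a load equal to its complex conjugate $Z_G^*$ (the maximum power transfer theorem). A mismatched sensor whose impedance happens to be near $Z_G^*$ can therefore receive more power than a matched one.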

Uncertainty

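If the complex reflection coefficients $\Gamma_G$ and $\Gamma_L$, including their phases, are both known, the measurement mismatch loss can be calculated and fully corrected.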

Unfortunately, this is often infeasible due to many practical difficulties.

For one, measuring the sensor is easy, but

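Without knowledge of the phase, the best we can do is correct for the magnitude-dependent terms and treat the remaining phase-dependent factor $1/|1 - \Gamma_G\Gamma_L|^2$ as an unknown error. In dB, it is bounded by:

$$-20\log_{10}\left(1 + |\Gamma_G||\Gamma_L|\right) \le -20\log_{10}\left|1 - \Gamma_G\Gamma_L\right| \le -20\log_{10}\left(1 - |\Gamma_G||\Gamma_L|\right)$$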

This is known as the Mismatch Uncertainty.

For example, a signal generator and a sensor with
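The mismatch uncertainty bounds depend only on the magnitudes of the two reflection coefficients, which is what makes them usable in practice. Here is a minimal Python sketch, assuming hypothetical SWR values of 1.5 at both ends; `mismatch_limits_db` is an illustrative name, and the brute-force sweep simply confirms that no phase falls outside the bounds.

```python
import cmath
import math


def mismatch_limits_db(gamma_g_mag: float, gamma_l_mag: float) -> tuple[float, float]:
    """Lower and upper bounds (dB) of the phase-dependent term -20*log10|1 - GG*GL|."""
    x = gamma_g_mag * gamma_l_mag
    return -20 * math.log10(1 + x), -20 * math.log10(1 - x)


if __name__ == "__main__":
    g_g = g_l = (1.5 - 1) / (1.5 + 1)  # hypothetical: both ends have SWR 1.5
    low, high = mismatch_limits_db(g_g, g_l)
    print(f"{low:+.3f} dB to {high:+.3f} dB")  # prints -0.341 dB to +0.355 dB

    # Brute-force check: sweep the unknown relative phase over a full circle.
    sweep = [-20 * math.log10(abs(1 - g_g * cmath.rect(g_l, math.radians(p))))
             for p in range(360)]
    print(f"{min(sweep):+.3f} dB to {max(sweep):+.3f} dB")  # same limits
```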

Nevertheless, in real experiments, it’s extremely unlikely that a 180-degree phase shift is encountered at every single data point, and one can argue this uncertainty is overly pessimistic. Indeed, sophisticated statistical models are available for reducing this uncertainty, based solely on the assumption of a uniformly distributed random phase.
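As an illustration of the statistical idea (a sketch, not a reproduction of any particular published model), assume the relative phase is uniformly random between 0 and 360 degrees. The phase-dependent term then follows a U-shaped distribution, and its standard deviation comes out close to the worst-case limit divided by √2. The Monte Carlo below checks that with hypothetical reflection coefficients; `simulate_mismatch_db` is an illustrative name.

```python
import cmath
import math
import random
import statistics


def simulate_mismatch_db(gamma_g_mag: float, gamma_l_mag: float,
                         n: int = 100_000, seed: int = 1) -> list[float]:
    """Monte Carlo samples of -20*log10|1 - GG*GL| with a uniformly random relative phase."""
    rng = random.Random(seed)
    x = gamma_g_mag * gamma_l_mag
    return [-20 * math.log10(abs(1 - x * cmath.exp(1j * rng.uniform(0, 2 * math.pi))))
            for _ in range(n)]


if __name__ == "__main__":
    g_g, g_l = 1 / 3, 0.1  # hypothetical: SWR 2.0 source, SWR ~1.22 sensor
    samples = simulate_mismatch_db(g_g, g_l)
    limit = 20 * math.log10(1 + g_g * g_l)  # worst-case magnitude, dB
    print(f"worst-case limit  : {limit:.3f} dB")
    print(f"simulated std dev : {statistics.pstdev(samples):.3f} dB")
    print(f"limit / sqrt(2)   : {limit / math.sqrt(2):.3f} dB")
```

The typical error under this model is therefore roughly 70% of the worst-case limit, which is where the statistical treatment gains over the worst-case sum.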

It’s also worth pointing out that the phase shift is a function of frequency (the complex impedance and reflection coefficient keep rotating around the Smith chart as the frequency changes). If the frequency span is large enough, both extreme values will be encountered at some point in an experiment. When measuring a seriously mismatched setup with a spectrum analyzer and a tracking generator, this creates a “ripple” effect in the frequency response plot.
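The ripple can be reproduced with a simple model. The sketch below assumes a lossless cable of hypothetical length and velocity factor between the source and the sensor, with frequency-independent reflection coefficients (a simplification; real ones also vary with frequency). The sensor’s reflection, as seen from the source, rotates by $-2\beta\ell$, so the mismatch term oscillates with a period of $v/(2\ell)$ in frequency and a peak-to-peak amplitude of $20\log_{10}\frac{1 + |\Gamma_G||\Gamma_L|}{1 - |\Gamma_G||\Gamma_L|}$ dB. `ripple_sweep` is an illustrative name.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def ripple_sweep(gamma_g: complex, gamma_l: complex, length_m: float,
                 velocity_factor: float, f_start: float, f_stop: float,
                 points: int = 10_001) -> list[tuple[float, float]]:
    """Phase-dependent mismatch term (dB) versus frequency for a sensor at the end
    of a lossless line: the sensor's reflection rotates by -2*beta*length."""
    v = velocity_factor * C
    sweep = []
    for i in range(points):
        f = f_start + (f_stop - f_start) * i / (points - 1)
        beta = 2 * math.pi * f / v                            # phase constant, rad/m
        gl_seen = gamma_l * cmath.exp(-2j * beta * length_m)  # reflection seen at the source
        sweep.append((f, -20 * math.log10(abs(1 - gamma_g * gl_seen))))
    return sweep


if __name__ == "__main__":
    # Hypothetical setup: SWR 2.0 source, SWR 1.5 sensor, 1 m of cable with vf = 0.66.
    data = ripple_sweep(1 / 3, 0.2, 1.0, 0.66, 1e9, 2e9)
    levels = [db for _, db in data]
    x = (1 / 3) * 0.2
    print(f"peak-to-peak ripple : {max(levels) - min(levels):.3f} dB")
    print(f"predicted           : {20 * math.log10((1 + x) / (1 - x)):.3f} dB")
    print(f"ripple period       : {0.66 * C / (2 * 1.0) / 1e6:.1f} MHz")
```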

When the device-under-test has a significant impedance mismatch, measurement quality is visibly degraded. A good way to reduce mismatch uncertainty in this scenario is to add an attenuator in front of the device.
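Why an attenuator helps can be quantified in a few lines: a reflection from the device has to pass through the pad twice, so its magnitude is reduced by twice the pad’s attenuation. The sketch assumes an ideal, perfectly matched pad (real pads have their own SWR, which this ignores); `gamma_through_pad` and the SWR values are hypothetical.

```python
import math


def gamma_through_pad(gamma_mag: float, atten_db: float) -> float:
    """|Gamma| seen through an ideal, perfectly matched attenuator.
    The reflected wave crosses the pad twice, so it is attenuated by 2 * atten_db."""
    return gamma_mag * 10 ** (-2 * atten_db / 20)


def swr_from_gamma(gamma_mag: float) -> float:
    """SWR corresponding to a reflection coefficient magnitude below 1."""
    return (1 + gamma_mag) / (1 - gamma_mag)


if __name__ == "__main__":
    dut_gamma = (2.0 - 1) / (2.0 + 1)  # hypothetical DUT with SWR 2.0
    sensor_gamma = 0.2                 # hypothetical sensor with SWR 1.5
    for pad_db in (0, 3, 6, 10):
        g = gamma_through_pad(dut_gamma, pad_db)
        x = g * sensor_gamma
        low, high = -20 * math.log10(1 + x), -20 * math.log10(1 - x)
        print(f"{pad_db:2d} dB pad: SWR {swr_from_gamma(g):.3f}, "
              f"mismatch limits {low:+.3f} / {high:+.3f} dB")
```

The price is a lower signal level at the sensor, which makes the zero and noise errors discussed later relatively larger.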

Calibration Uncertainty

An imperfect sensor calibration is also a major source of measurement uncertainties.

Calibration Factor

When an RF power sensor is manufactured, the factory performs an initial calibration to determine how much its readings deviate from those of an ideal sensor. This difference, as a percentage, is then printed onto the sensor as a Calibration Factor (CF) table.

Image by eBay vendor advancedtestequipment, fair use.

To help users calculate a more realistic mismatch uncertainty than the datasheet’s maximum limits, the sensor’s reflection coefficients are often measured at the same time. Sometimes even the phase is available in a separate paper report provided along with the meter.

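This calibration corrects two errors. First, the mismatch loss of the sensor itself: as explained previously, because of impedance mismatch, a small portion of the source’s available power never enters the sensor. Next, the effective efficiency ($\eta_e$) of the sensor, explained below. The Calibration Factor is the product of the two:

$$K_b = \eta_e\left(1 - |\Gamma_L|^2\right)$$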

Using the sensor in the picture as an example, at 1 GHz, the mismatch loss is
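With the usual definition $K_b = \eta_e(1 - |\Gamma_L|^2)$, a printed Calibration Factor and a measured $|\Gamma_L|$ can be split back into their two parts. Here is a minimal sketch with hypothetical label values (CF = 98%, $|\Gamma_L| = 0.05$), not the values of the sensor in the picture; the function names are illustrative.

```python
def calibration_factor(eta_e: float, gamma_l_mag: float) -> float:
    """K_b = eta_e * (1 - |Gamma_L|^2), expressed as a fraction (0.98 means 98 %)."""
    return eta_e * (1 - gamma_l_mag ** 2)


def effective_efficiency(cf: float, gamma_l_mag: float) -> float:
    """Recover eta_e from a printed Calibration Factor and the measured |Gamma_L|."""
    return cf / (1 - gamma_l_mag ** 2)


def corrected_power(indicated: float, cf: float) -> float:
    """Incident power from a reading taken with the meter's CF setting at 100 %."""
    return indicated / cf


if __name__ == "__main__":
    cf, rho = 0.98, 0.05  # hypothetical label values: CF = 98 %, |Gamma_L| = 0.05
    eta = effective_efficiency(cf, rho)
    print(f"mismatch part   : {1 - rho ** 2:.4f}")  # prints 0.9975
    print(f"efficiency part : {eta:.4f}")           # prints 0.9825
    print(f"1.000 mW reading -> {corrected_power(1.0, cf):.4f} mW incident")  # 1.0204
```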

Effective Efficiency

Just because power enters the sensor doesn’t mean it’s actually metered by the sensing element: a small amount of power can be lost in lossy conductors, circuit components, or radiation. Moreover, due to imperfections of the sensing element, its DC output is not exactly proportional to the incoming power, especially across the frequency spectrum.

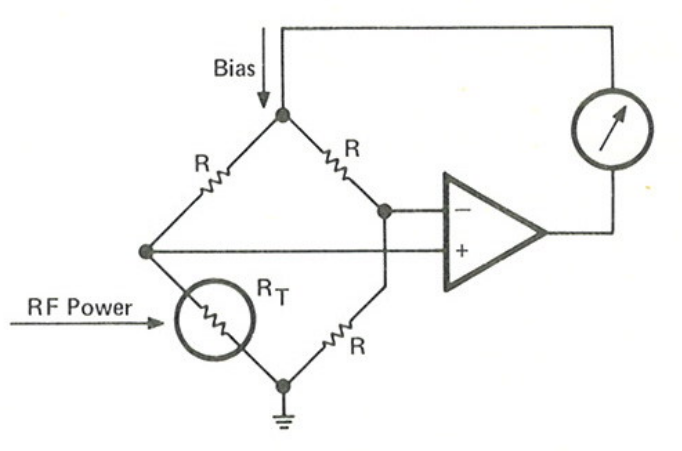

It’s called efficiency because the original thermistor sensors operate by the principle of RF/DC substitution: the incoming RF power is dissipated in a resistor bridge circuit, while the sensor also dissipates its own DC power in the same bridge. The system reaches equilibrium when the temperature increase due to RF heating is the same as what would be caused by DC heating. Thus, $\eta_e$ is a true efficiency: the ratio of the substituted DC power to the net RF power entering the sensor.

For thermocouple and diode sensors, common in modern times, however, the imperfect effective efficiency is mostly due to non-ideal characteristics of the sensing element, for example, a diode’s deviation from the square law across frequencies. Thus, effective efficiency is defined as the ratio between the DC reading and the RF input, and is not a true efficiency.

Uncertainty

In the ideal world, applying the Calibration Factor according to the RF signal frequency you’re measuring should completely correct all errors of a particular power sensor.

Unfortunately, a calibration lab is not magic: it encounters all the same uncertainties described in this article, albeit controlling them better. Thus, the Calibration Factors themselves have associated uncertainties, which are documented in the sensor datasheet or your calibration report.

For example, this is the specification of the HP 8482A power sensor.

| Frequency | Sum of Uncertainties (%) | Probable Uncertainties (%) |
|---|---|---|
| 100 kHz | 2.3 | 1.3 |
| 300 kHz | 2.2 | 1.2 |
| 1 MHz | 2.2 | 1.2 |
| 3 MHz | 2.2 | 1.2 |
| 10 MHz | 2.5 | 1.3 |
| 30 MHz | 2.6 | 1.4 |
| 100 MHz | 3.1 | 1.6 |
| 300 MHz | 3.1 | 1.6 |
| 1 GHz | 2.7 | 1.4 |
| 2 GHz | 2.7 | 1.4 |
| 4 GHz | 2.8 | 1.5 |

Sum of Uncertainties is the worst-case uncertainty obtained by adding all contributions together, while Probable Uncertainties are the statistically more likely Root Sum Square (RSS) uncertainties. See Appendix 1.
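Since uncertainty budgets mix percentages and decibels, a converter is handy. Here is a Python sketch that converts the HP 8482A figures from the table above; note that the conversion is not symmetric, e.g. -4% corresponds to -0.18 dB while +4% is +0.17 dB. The function and dictionary names are hypothetical.

```python
import math


def percent_to_db(p: float) -> float:
    """Convert a power uncertainty in percent (e.g. 2.7 or -4.0) to dB."""
    return 10 * math.log10(1 + p / 100)


def db_to_percent(db: float) -> float:
    """Inverse of percent_to_db."""
    return (10 ** (db / 10) - 1) * 100


# HP 8482A Calibration Factor uncertainty from the table above: (sum %, probable %)
HP8482A_CF_UNCERTAINTY = {
    "100 kHz": (2.3, 1.3), "300 kHz": (2.2, 1.2), "1 MHz": (2.2, 1.2),
    "3 MHz": (2.2, 1.2), "10 MHz": (2.5, 1.3), "30 MHz": (2.6, 1.4),
    "100 MHz": (3.1, 1.6), "300 MHz": (3.1, 1.6), "1 GHz": (2.7, 1.4),
    "2 GHz": (2.7, 1.4), "4 GHz": (2.8, 1.5),
}

if __name__ == "__main__":
    for freq, (worst, probable) in HP8482A_CF_UNCERTAINTY.items():
        print(f"{freq:>8}: {percent_to_db(worst):.3f} dB worst case, "
              f"{percent_to_db(probable):.3f} dB RSS")
```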

Some RF power sensors, especially thermistor sensors but also some modern ones, are physically capable of higher accuracy than their factory calibration uncertainty suggests. In fact, off-the-shelf commercial sensors are frequently calibrated by national standards labs and used as inter-laboratory comparison standards.

For example, the HP 478A thermistor sensor has a factory calibration uncertainty of 1.4%, but at NIST, 0.3% to 0.7% is achievable.

Thus, you can improve a sensor’s calibration uncertainty by redoing the calibration at the best lab you can afford. For example, in the United States, the National Institute of Standards and Technology will never let you down… for a price of around $5000. If you do choose this route, don’t forget to handle the sensor carefully afterwards (e.g. before connecting your sensor to other devices, you should use a $3000 connector gauge, which is basically a specialized micrometer, to measure the connector’s pin depth and avoid physical damage from low-quality, out-of-spec connectors), and to recalibrate it frequently… Wear and tear from daily use can change sensor characteristics.

Sensor Non-linearity

At high power levels, thermocouple sensors can have a slight non-linear response.

Some of the latest power sensors are calibrated at different power levels and use a multi-dimensional calibration factor table, applied under digital control, thus eliminating this error. But this is a problem for older sensors, which are only calibrated at a single level and depend on the sensor’s linearity to provide accurate results.

The HP 8481A, 8482A, and 8483A thermocouple sensors have a worst-case error of [-4%, +2%] (-0.18 dB, +0.09 dB) when operating between 10 dBm and 20 dBm, and a negligible error otherwise. Diode sensors are not affected. Always remember to read the manuals.

Reference Uncertainty

In an old-school thermistor power sensor operating by the principle of RF/DC substitution, the heat generated by the incoming RF power is constantly compared with the sensor’s DC power. This is called a closed-loop measurement: the sensor, once calibrated, does not need further adjustments. Component drifts are automatically compensated, because a drift affects the DC power in the same way.

But for thermocouple and diode sensors, common in modern times, it’s an open-loop measurement. The measured power is whatever the sensing element reads, and there is no inherent compensation for component drifts. Thus, RF power meters have a built-in 50 MHz reference signal generator. It uses Automatic Level Control to keep the output power at 0 dBm (1 milliwatt), and is periodically recalibrated. Each time before the sensor is used, one first connects it to the power reference and fine-tunes the meter’s offset control to get a 0 dBm reading, thus ensuring accuracy.

However, the calibration of the power reference itself is never perfect, which introduces a small uncertainty into all measurements, typically 1%. For example, the HP 436A power meter’s reference is factory calibrated to within 0.7% of the NIST reference; its one-year accuracy is 0.9% RSS and 1.2% worst case (0.052 dB).

When the sensor is connected to the reference for initial adjustment, mismatch uncertainty also applies, but it’s on the order of 0.01 dB and can be neglected (50 MHz is a low frequency, where near-perfect impedance matching is easy to maintain).

Instrumentation Uncertainty

After the sensor finishes its job of generating a DC voltage proportional to the RF power, the final uncertainty is introduced by the imperfect DC voltage measurement of the power meter, which is a separate apparatus connected to the sensor via a cable.

This is usually the smallest component of measurement uncertainty and is often neglected. For the HP 436A power meter, it’s only 0.5% (0.02 dB).

There are also some miscellaneous uncertainties, consult your manuals for more details. On the HP 436A, there are:

- Zero set uncertainty: only 1 count on most ranges, effectively a rounding error. But on the lowest range it can be 0.5% of Full Scale (not a percentage of the reading). The uncertainty is largest when measuring the smallest value in a range: when measuring a tiny 1 nanowatt on the 10 nanowatt range, this error is 0.21 dB.
- Zero carry over uncertainty: 0.2% of Full Scale, which applies only when the meter is zeroed and later switched to a different range. Theoretically this can be as large as 0.09 dB worst case, but Hewlett-Packard guarantees that the actual performance is much better, to the extent that rezeroing the meter is not recommended.
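Both zero errors are fixed offsets specified as a fraction of full scale, so their relative size grows as the reading shrinks. Here is a sketch reproducing the two figures above; for the carry-over case I assume the worst-case reading is one tenth of full scale, which matches the 0.09 dB figure but is my assumption rather than a quote from the manual. `offset_error_db` is a hypothetical name.

```python
import math


def offset_error_db(fraction_of_full_scale: float, full_scale: float, reading: float) -> float:
    """Worst-case dB error caused by a fixed offset specified as a fraction of full scale."""
    return 10 * math.log10(1 + fraction_of_full_scale * full_scale / reading)


if __name__ == "__main__":
    # Zero set: 0.5 % of full scale on the 10 nW range, reading 1 nW.
    print(f"{offset_error_db(0.005, 10e-9, 1e-9):.2f} dB")  # prints 0.21 dB
    # Zero carry over: 0.2 % of full scale, reading at 1/10 of full scale (assumed).
    print(f"{offset_error_db(0.002, 1.0, 0.1):.2f} dB")     # prints 0.09 dB
```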

Finally, there is some noise and drift in the sensor and the meter circuitry. It is on the order of nanowatts and picowatts, and not a concern unless one is measuring on the lowest range.

Example

An amplifier with an SWR of 2.0 is measured by the HP 8482A sensor on the HP 436A meter; the readings are 9 dBm and 20 dBm at 1 GHz. What is the measurement uncertainty?

Answer:

- Mismatch Uncertainty: The source has
- Calibration Uncertainty: 2.7% (0.115 dB) worst case, 1.4% RSS (0.060 dB) at 1 GHz.
- Sensor Non-Linearity: 4% (0.18 dB).
- Reference Uncertainty: 1.2% (0.052 dB).
- Instrumentation Uncertainty: 0.5% (0.02 dB), with negligible zero set and zero carry over uncertainties (we’re at the top of the 20 dBm range).
- Noise and Drift: 40 nanowatts noise, 10 nanowatts one-hour drift.

| Source | Uncertainty |
|---|---|
| Mismatch | 0.07 dB, 1.6% |
| Calibration | 0.115 dB, 2.7% |
| Non-Linearity | 0.18 dB, 4.0% |
| Reference | 0.052 dB, 1.2% |
| Instrumentation | 0.02 dB, 0.5% |
| Noise and Drift | Negligible |
| Total (worst case) | 0.437 dB, 10.0% |
| Total (RSS) | 0.222 dB, 5.25% |

This is not a rigorous answer. For example, I simply took the largest uncertainty for non-linearity instead of using the upper and lower bounds separately. It’s only meant to give a sense of scale.
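The totals in the table can be reproduced as follows: the worst case adds the contributions directly, while the RSS total combines the percentages as a root sum square and then converts the result to dB. A minimal sketch; the `BUDGET` dictionary (a hypothetical name) simply copies the table.

```python
import math

# (dB, percent) entries copied from the uncertainty table above
BUDGET = {
    "Mismatch": (0.07, 1.6),
    "Calibration": (0.115, 2.7),
    "Non-Linearity": (0.18, 4.0),
    "Reference": (0.052, 1.2),
    "Instrumentation": (0.02, 0.5),
}

worst_db = sum(db for db, _ in BUDGET.values())     # add the dB values directly
worst_pct = sum(pct for _, pct in BUDGET.values())  # add the percentages directly
rss_pct = math.sqrt(sum(pct ** 2 for _, pct in BUDGET.values()))
rss_db = 10 * math.log10(1 + rss_pct / 100)         # convert the RSS percentage to dB

print(f"worst case: {worst_db:.3f} dB, {worst_pct:.1f} %")  # prints 0.437 dB, 10.0 %
print(f"RSS       : {rss_db:.3f} dB, {rss_pct:.2f} %")      # prints 0.222 dB, 5.25 %
```

Taking the RSS of the dB values directly gives about 0.23 dB instead; at this scale, the two approaches differ only slightly.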

Appendix 0: See Also

- Importance and estimation of mismatch uncertainty for RF parameters in calibration laboratories, by K. Patel et al., International Journal of Metrology and Quality Engineering.
- Fundamentals of RF and Microwave Power Measurements, Hewlett-Packard Application Note 64 (1977).

Appendix 1: Basic Definitions Related to Uncertainty

Some basic definitions related to uncertainty are given here. A detailed and rigorous discussion of uncertainty is beyond the scope of this article.

Error and Uncertainty

Traditionally, measurement quality in engineering is described in terms of accuracy and error. Error is the difference between the measured value and the true value given by a standard reference. But this definition, while common and still widely used in engineering, has been seen as theoretically flawed.

For example, even an inaccurate, low-quality instrument can sometimes register a value very close to the true value by sheer luck. Of course, the experimenter can never know this without rechecking on a better instrument, but still, it’s objectionable to say that the nearly correct measurement result has a large “error”. Furthermore, the true value is often an idealized quantity and can never actually be known.

As a result, under many circumstances, the concept of error is now replaced by uncertainty. When we speak of uncertainty, we are describing the fact that we can never be sure of the measurement results due to imperfections in an experiment, there’s always a degree of doubt (they may or may not cause an actual error, but we just never know). Thus, a measurement result very close to its true value on a low-quality instrument has a small error, but a large uncertainty. This resolves the previous paradox.

Sum of Uncertainty and Root Sum Square Uncertainty

Traditionally, error or uncertainty is often calculated for the worst-case scenario. For example, if a voltage regulator can have an error of up to 5%, and an amplifier contributes another 5%, the sum of uncertainty of this instrument is 10.25% (1.05 × 1.05 = 1.1025). That is to say, if the instrument is built with the worst possible voltage regulator from one supplier and, simultaneously, the worst possible amplifier from another supplier, with both 5% errors in the same direction, the instrument is still accurate to approximately 10%. This is a proven method, and instruments specified this way are safe and reliable in the sense that they almost always meet or even exceed their specs.

However, one can criticize this approach as overly pessimistic. If we assume the variables are independent, the worst-case scenario is extremely unlikely to happen. Thus, an alternative method is the Root Sum Square Uncertainty, or RSS Uncertainty, which combines independent contributions as $\sqrt{u_1^2 + u_2^2 + \cdots}$ (7.07% for the example above) and gives a more realistic result.
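The regulator and amplifier example can be simulated to see how rarely the worst case occurs. The sketch assumes, hypothetically, that each error is independent and uniformly distributed within ±5%; under that model, the combined error exceeds 9% only about 1% of the time, and exceeds the RSS value of 7.07% about 8.6% of the time. The function names are illustrative.

```python
import math
import random


def rss(*limits: float) -> float:
    """Root Sum Square combination of independent uncertainty limits."""
    return math.sqrt(sum(u * u for u in limits))


def fraction_beyond(threshold: float, limits=(5.0, 5.0), n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often the combined error exceeds `threshold`,
    assuming each error is independent and uniformly distributed within +/- its limit."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        total = sum(rng.uniform(-u, u) for u in limits)
        if abs(total) > threshold:
            hits += 1
    return hits / n


if __name__ == "__main__":
    print(f"sum of limits    : {5.0 + 5.0:.2f} %")
    print(f"compounded       : {(1.05 * 1.05 - 1) * 100:.2f} %")  # prints 10.25 %
    print(f"RSS              : {rss(5.0, 5.0):.2f} %")             # prints 7.07 %
    print(f"P(|error| > 9 %) : {fraction_beyond(9.0):.3f}")
    print(f"P(|error| > RSS) : {fraction_beyond(rss(5.0, 5.0)):.3f}")
```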